Visual Acuity

Humans rely on the foveola, a small retinal region whose field of view is approximately half the width of a thumb held at arm’s length, to see fine spatial detail. Outside this region, it is well established that many visual functions decline progressively with eccentricity. Within the foveola, by contrast, vision is believed to be approximately uniform. However, this hypothesis has never been rigorously tested, primarily because of the microscopic eye movements that occur even during fixation. Mapping foveal vision is an extremely challenging task: these eye movements, which include incessant drifts and microsaccades, prevent the isolation of small retinal regions. By continually shifting the stimulus across neighboring receptors, fixational eye movements effectively homogenize measurements across adjacent retinal locations.
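To make the homogenization argument concrete, the minimal Python sketch below models ocular drift as two-dimensional Brownian motion. This is an assumption made for illustration; microsaccades are ignored. The diffusion constant, exposure duration, and target-patch radius are hypothetical values, not measurements from this research. The sketch reports how far a world-fixed stimulus wanders from the retinal location it first lands on, and what fraction of the exposure that small location actually receives.

```python
import math
import random


def drift_path(duration_s, dt_s, diffusion, rng):
    """Simulate one trial of 2D Brownian drift (hypothetical model).

    `diffusion` is in arcmin^2/s; along each axis the positional variance
    grows as 2 * diffusion * t. Returns the (x, y) offsets, in arcmin, of the
    stimulus's retinal image relative to where it landed at t = 0.
    """
    step_sd = math.sqrt(2.0 * diffusion * dt_s)  # per-axis step SD
    x = y = 0.0
    path = [(x, y)]
    for _ in range(round(duration_s / dt_s)):
        x += rng.gauss(0.0, step_sd)
        y += rng.gauss(0.0, step_sd)
        path.append((x, y))
    return path


def summarize(n_trials, duration_s, dt_s, diffusion, radius_arcmin, seed=0):
    """Return (RMS end-point displacement, mean fraction of samples within radius)."""
    rng = random.Random(seed)
    sq_sum = 0.0
    frac_sum = 0.0
    for _ in range(n_trials):
        path = drift_path(duration_s, dt_s, diffusion, rng)
        ex, ey = path[-1]
        sq_sum += ex * ex + ey * ey
        # Samples during which the stimulus was still on the initial target patch.
        inside = sum(1 for px, py in path if math.hypot(px, py) <= radius_arcmin)
        frac_sum += inside / len(path)
    return math.sqrt(sq_sum / n_trials), frac_sum / n_trials


if __name__ == "__main__":
    # Hypothetical parameters: 500 ms exposure, 1 ms steps,
    # D = 20 arcmin^2/s, and a 1-arcmin-radius target patch.
    rms, frac = summarize(1000, 0.5, 0.001, 20.0, 1.0)
    theory = math.sqrt(4 * 20.0 * 0.5)  # E[x^2 + y^2] = 4 * D * t in 2D
    print(f"RMS displacement: {rms:.2f} arcmin (theory {theory:.2f})")
    print(f"Fraction of exposure on the target patch: {frac:.2f}")
```

Under this model the RMS displacement grows with the square root of exposure time. Depending on the diffusion constant, even a brief presentation can therefore spread a nominally single-location stimulus over a neighborhood much larger than a small target patch. This is the sense in which fixational eye movements blur location-specific measurements.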

Spatial Representations

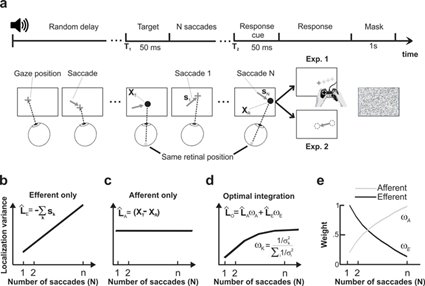

Keeping track of objects' locations across eye movements is a complex problem: even when an object is at a fixed location in space, its retinal projection shifts whenever the gaze moves. Thus, in a crowded scene, it is impossible to determine exactly where an object is based solely on the retinal input. How are spatial representations established and updated across saccades? This issue has been investigated extensively, and it is commonly accepted that the process depends on extra-retinal information about eye movements. However, it has remained unclear whether eye-movement proprioception (afferent signals) is involved in addition to efferent signals (copies of the motor command), and how these different signals are combined with the visual input. Our research addressed this question using a natural task that generated contrasting predictions for different updating mechanisms. By comparing experimental data with these theoretical predictions, we showed that spatial localization relies on the statistically optimal combination of afferent, efferent, and retinal signals (Poletti et al., 2013, The Journal of Neuroscience). Importantly, these findings provide evidence that eye-movement proprioception is involved in updating spatial representations.
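"Statistically optimal combination" usually refers to maximum-likelihood cue integration. Under this model, each signal is assumed to provide an independent, unbiased estimate with Gaussian noise, and the optimal estimate weights each cue by its reliability (inverse variance). The Python sketch below illustrates that generic textbook model in one dimension. It is not the model fitted in Poletti et al. (2013). The signal values and variances are hypothetical, and so is the use of a visual-landmark estimate as the retinal cue.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A Gaussian estimate: mean (deg) and variance (deg^2)."""
    mean: float
    var: float


def combine(*estimates: Estimate) -> Estimate:
    """Maximum-likelihood fusion of independent Gaussian estimates.

    Each cue is weighted by its reliability (1 / variance); the fused
    variance is never larger than that of the most reliable single cue.
    """
    reliabilities = [1.0 / e.var for e in estimates]
    total = sum(reliabilities)
    mean = sum(r * e.mean for r, e in zip(reliabilities, estimates)) / total
    return Estimate(mean, 1.0 / total)


def update_retinal_location(target: Estimate, eye_shift: Estimate) -> Estimate:
    """Predict a world-fixed target's retinal location after an eye movement.

    1D small-angle approximation: the retinal position shifts opposite to the
    gaze (new = old - eye displacement). Independent errors add in variance.
    """
    return Estimate(target.mean - eye_shift.mean, target.var + eye_shift.var)


if __name__ == "__main__":
    # Hypothetical numbers for a roughly 10-deg rightward saccade.
    efferent = Estimate(mean=10.4, var=0.25)  # from the motor command
    afferent = Estimate(mean=9.8, var=0.75)   # from eye-muscle proprioception
    eye_shift = combine(efferent, afferent)

    before = Estimate(mean=12.0, var=0.04)    # retinal location before the saccade
    predicted = update_retinal_location(before, eye_shift)

    retinal = Estimate(mean=1.5, var=0.5)     # hypothetical visual-landmark cue
    final = combine(predicted, retinal)
    print(eye_shift, predicted, final, sep="\n")
```

The design choice worth noting is that neither extra-retinal signal is trusted outright. Their relative weights follow from their variances, which is what lets such a model generate distinct predictions when one signal is perturbed.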

More recently, using virtual reality coupled with high-precision head tracking, my research has extended this investigation to the mechanisms underlying spatial localization in the presence of head movements. The goal of this study is to understand how information about active head movements is integrated into the spatial updating process.