Computational Vision

A central goal of our research is to uncover the fundamental computational strategies adopted by the visual system. To this end, our work combines experimental and theoretical approaches. We use neural modeling to explore and refine theoretical predictions, often exposing models of neurons at various stages of the visual pathways to reconstructions of the retinal input signals experienced by the subjects in our experiments.

Much of our research is driven by space-time encoding, a theory of how the visual system encodes spatial information that we have developed together with our collaborator Dr. Jonathan Victor.

Space-time encoding

Establishing a representation of space is a major goal for sensory systems. Spatial information, however, is not always explicit in the incoming sensory signals. In most modalities, it needs to be actively extracted from cues embedded in the temporal flow of receptor activation. Vision, on the other hand, starts with a sophisticated optical imaging system that explicitly preserves spatial information on the retina. This may lead to the assumption that vision is predominantly a spatial process: all that is needed is to transmit the retinal image to the cortex, like uploading a digital photograph, to establish a spatial map of the world. However, this deceptively simple analogy is inconsistent with theoretical models and experiments that study visual processing in the context of normal motor behavior. Our theory proposes that, like other senses, vision relies heavily on temporal strategies and temporal neural codes to extract and represent spatial information.

Stable visual representations, a moving visual world

Like a camera, the eye forms an image of the external scene on its posterior surface where the retina is located, with its dense mosaic of photoreceptors that convert light into electro-chemical signals. At each moment in time, all spatial information is present in the visual signals striking the photoreceptors, which explicitly encode space by their position within the retinal array. This camera model of the eye and the spatial coding idea have long dominated visual neuroscience.

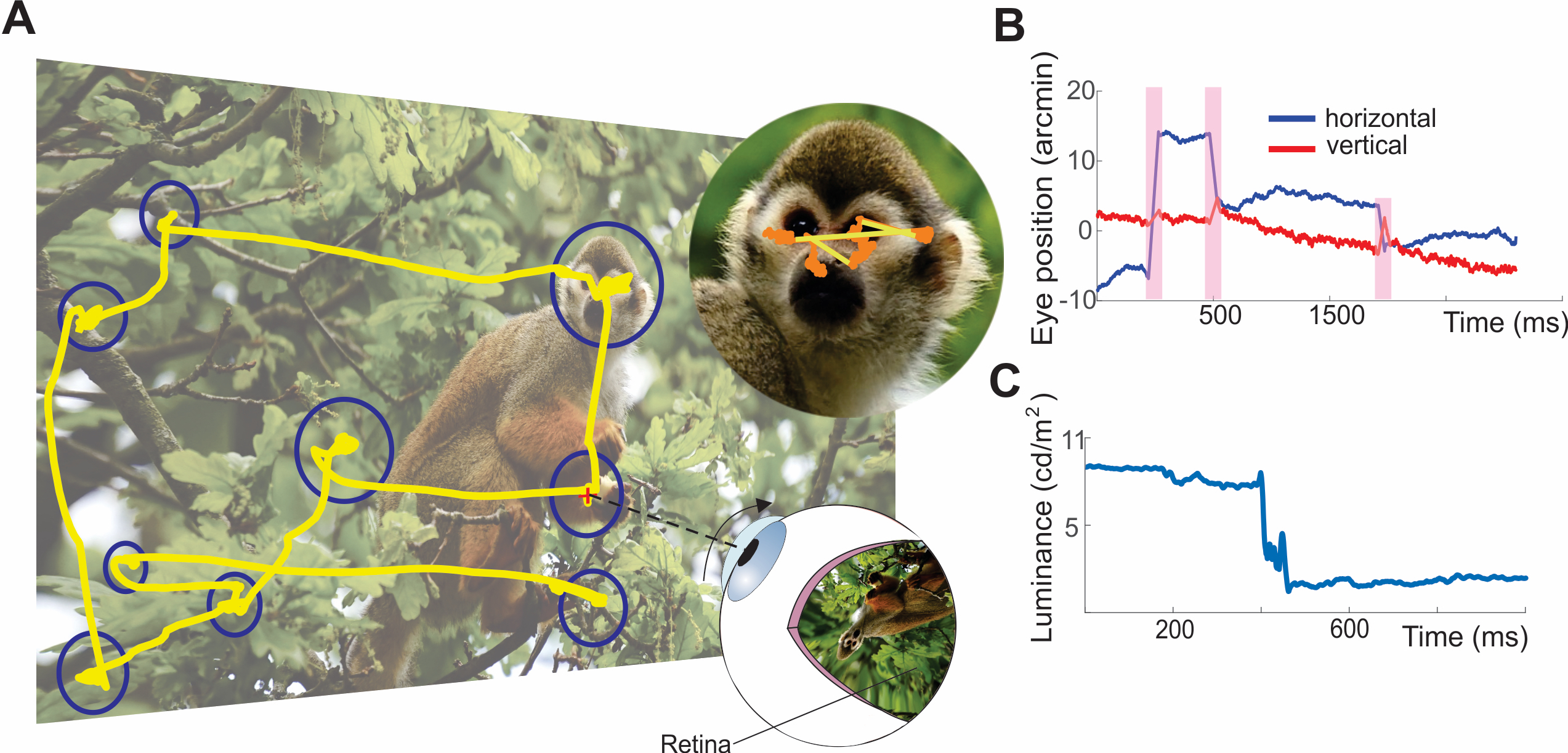

But the eye does not behave like a camera. While a photographer usually takes great care to keep the camera still, the eyes move incessantly. Humans perform rapid gaze shifts, known as saccades, two to three times per second. And although models of the visual system often assume the visual input to be a stationary image during the fixational pauses between successive saccades, small eye movements, known as fixational eye movements, occur continually. These movements displace the stimulus by considerable amounts on the retina, thereby continually changing the light signals striking the photoreceptors.

Furthermore, unlike the film in a camera, the visual system depends on temporal transients. Visual percepts tend to fade away in the complete absence of temporal transients, and spatial changes that occur too slowly are not even detected by humans. A well-established finding, preserved across species, is that neurons in the retina, thalamus, and later stages of the visual pathways respond much more strongly to changing than stationary stimuli. These considerations do not appear compatible with the standard idea that space is encoded solely by the position of neurons within spatial maps. They suggest that the visual system combines spatial sampling with temporal processing to extract and encode spatial information.

The main tenet of our theory is that luminance modulations caused by motor activity, eye movements in particular, are the driving engine of visual perception. In the laboratory, neurons are commonly activated by temporally modulating stimuli on the display, either by flashing or moving them; under natural viewing conditions, however, the most common cause of temporal modulation on the retina is our own behavior: moving our eyes. Eye movements continually transform stationary spatial scenes into a spatiotemporal luminance flow on the retina. That is, they reformat spatial signals in the joint space-time domain, and their characteristics determine how much spatial information is delivered within the range of spatiotemporal sensitivity of the visual system.

This idea builds on classical theories of dynamic visual acuity, which have repeatedly advanced, over the course of more than a century, the proposal that eye movements, rather than being detrimental, may actually be beneficial for high-acuity vision. The essence of the idea is that spatial information is not lost in the visual signals resulting from eye movements, but converted into temporal modulations that may be extracted via spatiotemporal codes.

Although this concept may at first appear counter-intuitive, consider that objects of interest are often in motion. This presents a problem for purely spatial mechanisms of neural encoding, because the windows of neuronal integration are long enough for even moderate speeds of motion to smear images beyond recognition. Yet this does not happen. Motion perception is often considered a specialized visual function that relies on dedicated neural machinery, separate from that normally used to process stationary spatial scenes. But even when objects are stationary in space, eye movements keep their projections in continuous motion on the retina. We argue that motion processing is not a special case for vision, but the norm. As there are no stationary retinal signals during natural vision, space-time encoding is the fundamental operating mode of human vision. A more technical way to state this is that there is no vision at zero Hz: vision always occurs at nonzero temporal frequencies, and most of the visual signals in this range derive from our eye movements.

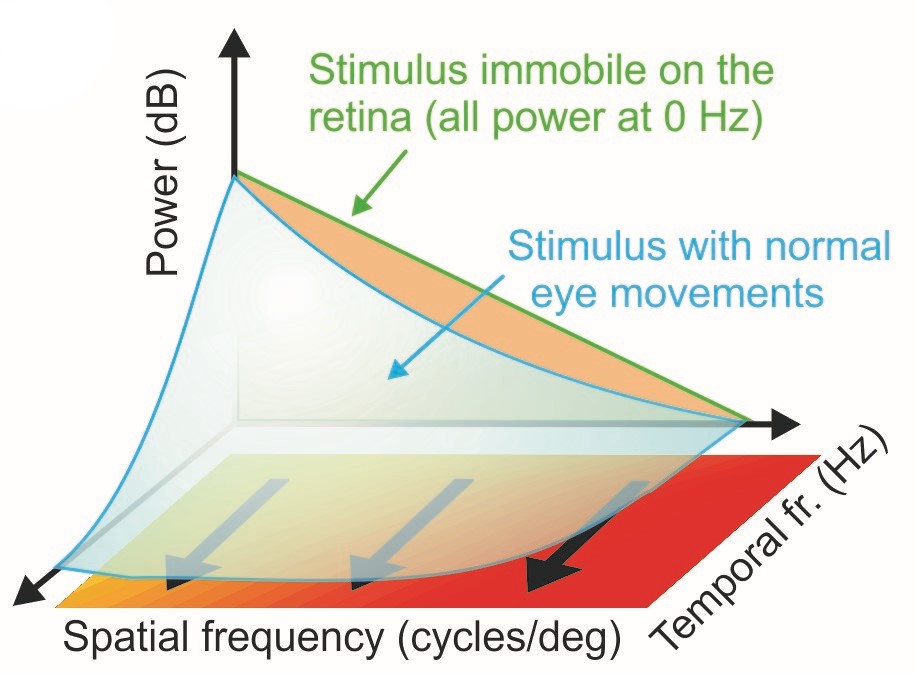

A helpful way to conceptualize and analyze the space-time reformatting resulting from eye movements is by means of the spectral distribution of the luminance flow impinging onto the retina, a representation of the power of the retinal stimulus over spatial frequencies and temporal frequencies. When a static scene is observed with immobile eyes — a situation that never actually occurs under natural conditions — the input to the retina is a static image. Its power is confined to 0 Hz. Eye movements transform this static scene into a spatiotemporal flow, an operation that, in the frequency domain, is equivalent to redistributing the 0 Hz power across nonzero temporal frequencies (blue surface in Fig. 1A).
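The redistribution described above can be illustrated with a small numerical toy. The Python sketch below is not the lab's analysis pipeline; it assumes a hypothetical one-dimensional, periodic random image and an eye trajectory restricted to whole-pixel shifts (a random walk chosen purely for illustration). It builds the retinal input r(x, t) = I(x - ξ(t)), takes its 2-D Fourier transform, and measures how much of the spatiotemporal power sits at 0 Hz.

```python
import numpy as np

def retinal_flow(image, shifts):
    """Retinal input r[t, x] = image[x - shifts[t]] for a periodic 1-D image."""
    return np.stack([np.roll(image, int(s)) for s in shifts])

def spectrum(flow):
    """Power over (temporal frequency, spatial frequency); row 0 is 0 Hz."""
    return np.abs(np.fft.fft2(flow)) ** 2

def zero_hz_fraction(flow):
    """Share of the total spatiotemporal power found at 0 Hz."""
    power = spectrum(flow)
    return power[0].sum() / power.sum()

rng = np.random.default_rng(0)
image = rng.standard_normal(256)
image -= image.mean()              # discard mean luminance; keep spatial structure only

n_frames = 128
fixed_eye = retinal_flow(image, np.zeros(n_frames))
# Hypothetical eye trajectory: a random walk in whole-pixel steps of -1, 0 or +1.
moving_eye = retinal_flow(image, np.cumsum(rng.integers(-1, 2, size=n_frames)))

print(zero_hz_fraction(fixed_eye))   # all power at 0 Hz
print(zero_hz_fraction(moving_eye))  # below 1: power moved to nonzero temporal frequencies
# Eye movements only move power around; the total is unchanged (Parseval).
print(np.isclose(spectrum(fixed_eye).sum(), spectrum(moving_eye).sum()))
```

Replacing the random walk with a constant shift also gives a 0 Hz fraction of 1: it is the change of eye position over time, not displacement per se, that moves power away from 0 Hz.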

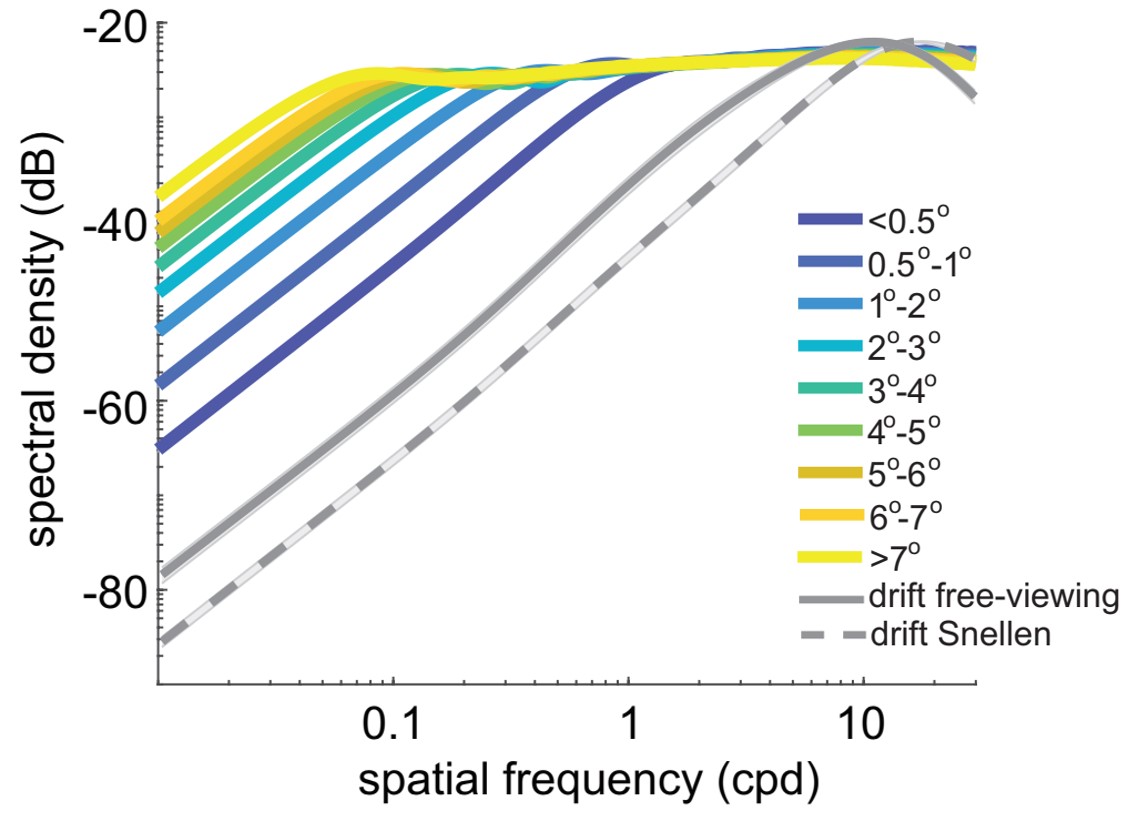

The exact way in which this redistribution occurs depends on the specific characteristics of the eye movements. Because saccades and drifts have very different properties, one might expect them to create signals with different spatiotemporal statistics on the retina. Our work has shown, however, that their luminance modulations differ quantitatively but not qualitatively: both types of eye movements yield modulations that are well matched to the characteristics of the natural visual world. When looking at natural scenes, both saccades and fixational drift equalize spatiotemporal power within a range of spatial frequencies, an effect known as spectral whitening, but the range of whitening varies across eye movements.

Drift-induced retinal modulations depend on the spatial frequency of the stimulus. This happens because, as the eye moves, the luminance signals impinging onto the retina fluctuate rapidly with stimuli at high spatial frequencies (stimuli that contain sharp edges and fine texture), but remain relatively constant with smooth, low-spatial-frequency stimuli.
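A minimal way to see this is to approximate drift, over a short interval, as motion at a constant speed v. This is a simplification (real drift is closer to a random walk), and the speed used below is a hypothetical, illustrative value. A receptor viewing a grating of spatial frequency k then sees a sinusoid at temporal frequency k·v, so finer patterns fluctuate faster.

```python
import numpy as np

def receptor_signal(k_cpd, speed_deg_s, t, x0_deg=0.0):
    """Luminance at one receptor (position x0) while a grating of spatial
    frequency k (cycles/deg) is swept across the retina at constant speed."""
    return np.cos(2 * np.pi * k_cpd * (x0_deg - speed_deg_s * t))

def dominant_temporal_frequency(signal, fs):
    """Frequency (Hz) of the largest peak in the signal's amplitude spectrum."""
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    return freqs[np.argmax(spectrum)]

fs = 1000.0                 # sampling rate (Hz)
t = np.arange(2000) / fs    # 2 s of samples
speed = 0.5                 # deg/s, hypothetical drift speed (30 arcmin/s)

for k in (1.0, 20.0):       # coarse vs. fine grating, cycles/deg
    f = dominant_temporal_frequency(receptor_signal(k, speed, t), fs)
    print(f"{k:g} cycles/deg -> {f:g} Hz (expected k * v = {k * speed:g} Hz)")
```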

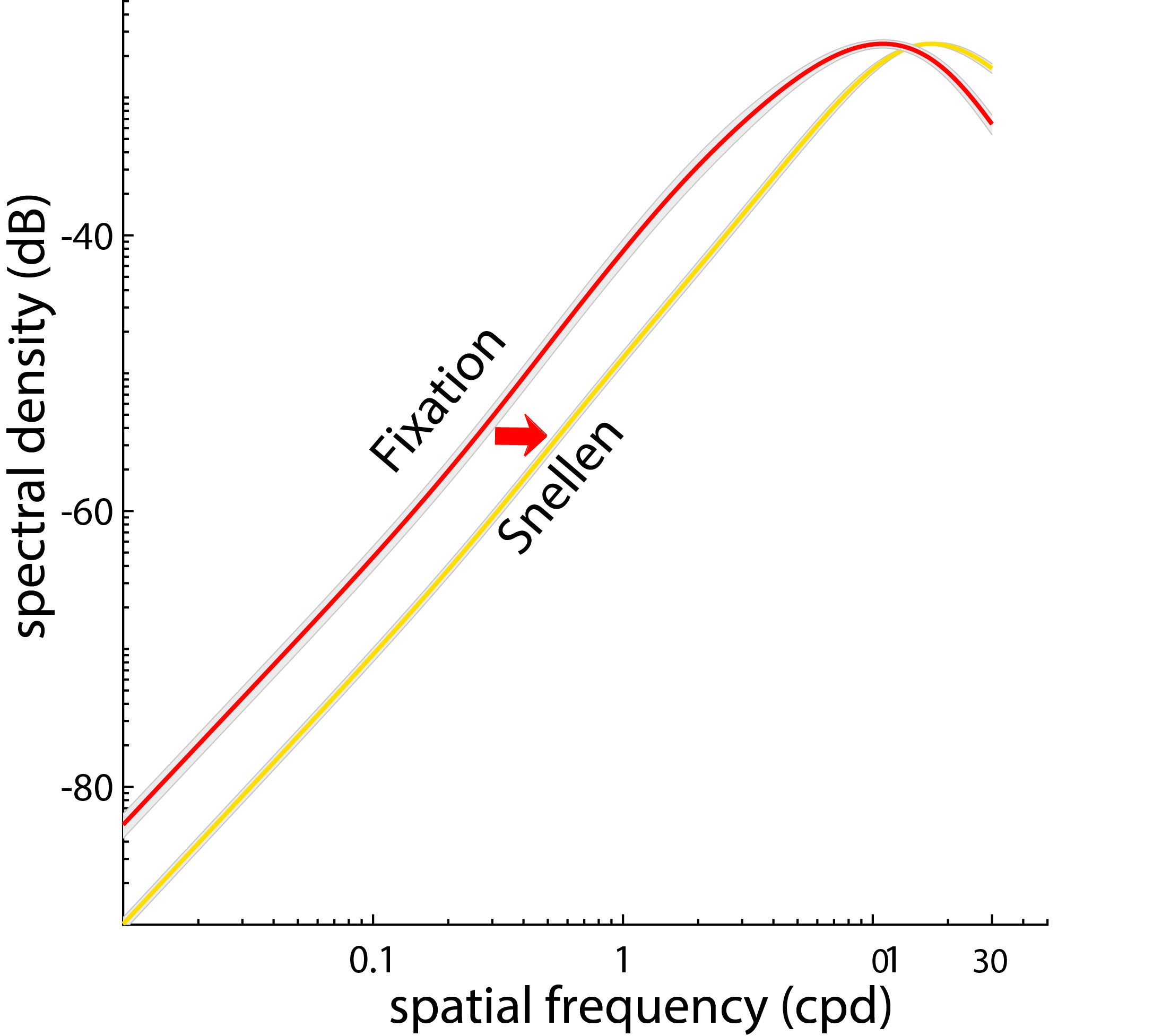

This intuition is quantitatively captured by the figure below, which shows the proportion of spatial power in the otherwise static image (the 0 Hz power) that drift redistributes to nonzero temporal frequencies. The redistributed power increases in proportion to the square of the spatial frequency, up to a limit that depends on the amount of drift. When summing all temporal sections (slices of the spectrum at fixed temporal frequency) within the range of human sensitivity, an enhancement can be observed up to 30 cycles/deg, close to the spatial resolution of the photoreceptor array.
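The k² law and its drift-dependent limit can be sketched analytically if drift is modeled as Brownian motion with a diffusion constant D, an assumption made here for illustration. Under that model, the power of a grating of spatial frequency k is spread over temporal frequency f as a Lorentzian, 2Γ/(Γ² + (2πf)²) with Γ = 4π²Dk². This curve integrates to 1 over all f, so power is redistributed rather than created. The value of D and the 10 Hz section below are hypothetical.

```python
import numpy as np

def drift_gamma(k_cpd, D):
    """Decay rate (1/s) of a grating's temporal correlation under Brownian drift:
    g = 4*pi^2*D*k^2, with D in deg^2/s and k in cycles/deg."""
    return 4 * np.pi**2 * D * np.asarray(k_cpd, dtype=float) ** 2

def drift_section(k_cpd, f_hz, D):
    """Power density at temporal frequency f, per unit of the grating's static
    (0 Hz) power: a Lorentzian in f that integrates to 1 over all f."""
    g = drift_gamma(k_cpd, D)
    return 2 * g / (g**2 + (2 * np.pi * f_hz) ** 2)

D = 20 / 3600                     # hypothetical: 20 arcmin^2/s expressed in deg^2/s
f = 10.0                          # one temporal section (Hz)
k = np.geomspace(0.1, 100, 400)   # cycles/deg
section = drift_section(k, f, D)

# Below the limit, doubling k multiplies the redistributed power by ~4 (k^2 law).
print(drift_section(1.0, f, D) / drift_section(0.5, f, D))
# The section peaks where g = 2*pi*f, i.e. at k = sqrt(f / (2*pi*D)),
# a limit that moves to higher k as D (the amount of drift) decreases.
print(k[np.argmax(section)], np.sqrt(f / (2 * np.pi * D)))
```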

The space-time reformatting caused by ocular drift combines with the spectral density of natural images in an interesting way. The spectral power in natural scenes declines approximately with the square of the spatial frequency, resulting in less power at high spatial frequencies, the opposite of the amplification caused by ocular drift. Thus, the motion of the eye enhances the frequency components that have lower power in natural scenes. These two effects counterbalance each other, so that fixational modulations at different spatial frequencies contain approximately equal power up to the point at which the amplification by fixational eye movements begins to be attenuated. The range of whitening depends on the amplitude of ocular drift, increasing for smaller drifts. In sum, ocular drift causes a very specific input reformatting during normal fixation, transforming natural scenes with a spectral density heavily biased toward low spatial frequencies into luminance fluctuations with equalized spatial power at nonzero temporal frequencies. This is the dominant input signal during the late phase of inter-saccadic fixation, when neurons are no longer affected by the consequences of the preceding saccade.
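The counterbalancing can be sketched numerically if drift is modeled as Brownian motion (an assumption made here for illustration) with a hypothetical diffusion constant D, and a hypothetical 5 to 80 Hz band stands in for the range of temporal sensitivity. Multiplying an idealized 1/k² natural-image spectrum by the fraction of power that drift moves into that band gives a product that is flat at low spatial frequencies and falls off beyond a cut-off; the cut-off moves to higher k when D is smaller.

```python
import numpy as np

def drift_band_fraction(k_cpd, D, f_lo, f_hi):
    """Fraction of a grating's power that Brownian drift (diffusion constant D,
    deg^2/s) places in the band f_lo <= |f| <= f_hi (Hz): the Lorentzian
    2*g/(g^2 + (2*pi*f)^2), with g = 4*pi^2*D*k^2, integrated over both signs of f."""
    g = 4 * np.pi**2 * D * np.asarray(k_cpd, dtype=float) ** 2
    return (2 / np.pi) * (np.arctan(2 * np.pi * f_hi / g) - np.arctan(2 * np.pi * f_lo / g))

def retinal_band_power(k_cpd, D, f_lo=5.0, f_hi=80.0):
    """Idealized 1/k^2 natural-image spectrum (arbitrary units) times the drift redistribution."""
    k = np.asarray(k_cpd, dtype=float)
    return drift_band_fraction(k, D, f_lo, f_hi) / k**2

def whitening_cutoff(D, k):
    """First k at which band power falls below half of its low-frequency level."""
    p = retinal_band_power(k, D)
    return k[np.argmax(p < 0.5 * p[0])]

D = 20 / 3600                       # hypothetical drift diffusion constant: 20 arcmin^2/s in deg^2/s
k = np.geomspace(0.1, 100, 2000)    # cycles/deg

print(retinal_band_power(2.0, D) / retinal_band_power(1.0, D))  # ~1: flat (whitened) at low k
print(whitening_cutoff(D, k))       # cut-off for this drift
print(whitening_cutoff(D / 4, k))   # smaller drift -> roughly twice the cut-off
```

In this model the cut-off scales as 1/√D, which is one way to read the statement that the range of whitening increases for smaller drifts; the specific numbers depend entirely on the assumed D and band.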

Under natural conditions, ocular drift does not occur in isolation, but alternates with rapid gaze shifts of various magnitudes, from microsaccades to saccades. These movements position objects of interest on the small high-acuity region of the retina known as the fovea. But in doing so they also deliver sharp temporal transients to the retina, which likely drive neuronal responses in the early phase of fixation, immediately following each saccade. Despite the massive impact of saccades at both the perceptual and neural levels, until recently relatively little attention had been paid to the information content of the luminance signals that these movements deliver to the retina. As they relocate gaze, saccades yield complex spatiotemporal modulations that depend on both the dynamics of the movement and the statistics of the visual scene.

We have recently shown that the characteristics of saccades, particularly their dynamics and the velocity-amplitude relation, lead to luminance modulations on the retina that counterbalance the power spectra of natural scenes up to an amplitude-dependent cut-off frequency. The resulting conversion of spatial patterns into temporal signals is similar to that previously observed for inter-saccadic eye drifts, but now compressed in spatial frequency and with greater power at low spatial frequency. The bandwidth of this phenomenon increases for small saccades, with microsaccades approaching the previously reported effects for ocular drift.
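The same logic can be applied to saccades in a deliberately crude form. If a saccade of amplitude A is idealized as an instantaneous step (ignoring the movement's dynamics and the velocity-amplitude relation that the actual analysis depends on), a grating of spatial frequency k acquires, at any fixed nonzero temporal frequency, power proportional to 4 sin²(πkA). Multiplying by a 1/k² spectrum gives a curve that is flat up to a cut-off near 0.44/A: larger saccades carry more low-frequency power, and smaller ones whiten a broader band. The amplitudes below are illustrative, and the step model is not meant to reproduce the measured cut-offs.

```python
import numpy as np

def saccade_band_power(k_cpd, amplitude_deg):
    """Power at a fixed nonzero temporal frequency after an idealized,
    instantaneous saccade, for a 1/k^2 image spectrum (arbitrary units).
    The step contributes |1 - exp(-2j*pi*k*A)|^2 = 4*sin(pi*k*A)^2; dividing by
    k^2 gives 4*pi^2*A^2*sinc(k*A)^2 (np.sinc is the normalized sinc).
    The 1/(2*pi*f)^2 factor shared by all k is omitted."""
    k = np.asarray(k_cpd, dtype=float)
    return 4 * np.pi**2 * amplitude_deg**2 * np.sinc(k * amplitude_deg) ** 2

def half_power_cutoff(amplitude_deg, k):
    """First k at which the power drops below half its low-frequency level."""
    p = saccade_band_power(k, amplitude_deg)
    return k[np.argmax(p < 0.5 * p[0])]

k = np.geomspace(0.001, 100, 4000)         # cycles/deg
for amplitude in (0.25, 5.0):              # deg: microsaccade-sized vs. larger saccade
    level = float(saccade_band_power(k[0], amplitude))
    cutoff = float(half_power_cutoff(amplitude, k))
    print(f"A = {amplitude} deg: low-k level {level:.3g}, cut-off {cutoff:.3g} cycles/deg")
```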

Thus, the luminance signals delivered by movements as different as saccades and ocular drifts are part of a continuum. A stereotypical evolution in the bandwidth of whitening continually occurs during the natural alternation between saccades and fixational drifts. Visual responses are driven by a signal that contains strong low-spatial-frequency power and a narrow whitening bandwidth immediately after a saccade, and stronger high-spatial-frequency power and broader whitening later during fixation.

Implications & predictions

Our theory presents a new picture of early visual processing. It replaces the traditional view of the early visual system as a passive encoding stage with the more complex view that neurons in the early pathway are part of an active strategy of visual processing and feature extraction, whose function can only be understood in conjunction with eye movements. It argues that spatial information is not just stored in spatial maps of neural activity, as commonly assumed, but also in the temporal structure of the responses of neuronal ensembles, whose dynamics are critically shaped by eye movements and therefore potentially under task control. It suggests that, rather than being an inflexible encoding stage designed to optimize the average transmission of information, the retina and eye movements together constitute an adaptive system whose properties can be rapidly adjusted to optimize performance on the task at hand. It predicts that eye movements contribute to fundamental properties of spatial vision currently attributed to neural mechanisms alone.

Furthermore, space-time encoding predicts new functions for oculomotor control, as it argues that eye movements are not merely a means to center the high-acuity fovea on the objects of interest. Rather, they appear to play important roles in processing visual information before neural computations take place, by removing broad-scale correlations in natural scenes, enhancing low-frequency vision, and setting the stage for coarse-to-fine processing dynamics. Several of the predictions of this theory have now been confirmed. These include the findings that fixational eye movements enhance high-spatial-frequency vision (Rucci et al., 2007) and that saccades amplify coarse spatial patterns (Boi et al., 2017).

The following articles describe various aspects of the theory and test specific predictions:

- Tuning of ocular drift in a visual acuity test enhances high spatial frequencies (Intoy & Rucci, Nature Communications, 2020)

- The power redistribution resulting from saccades and its relation to the characteristics of natural scenes; the whitening bandwidth oscillates during the saccade-fixation cycle (Zhao et al., Current Biology, 2020)

- Space-time encoding predicts human contrast sensitivity under a variety of conditions (Casile et al., eLife, 2019)

- General considerations of encoding space in time and possible implications for emmetropization (Rucci et al., 2018; Rucci & Victor, 2018)

- The saccade-fixation cycle establishes coarse-to-fine perceptual dynamics (Boi et al., Current Biology, 2017)

- The unsteady eye is an information processing stage, not a bug (Rucci & Victor, Trends in Neurosciences, 2015)

- Spectral whitening caused by ocular drift is preserved during natural head-free viewing of natural scenes (Aytekin et al., Journal of Neuroscience, 2014)

- Temporal modulations from fixational eye drifts are matched to the characteristics of the natural visual world (Kuang et al., Current Biology, 2012)

- A first summary of the theory (Rucci et al., Nature, 2007)

- A model of visual dynamics caused by the saccade/fixation cycle (Desbordes & Rucci, Visual Neuroscience, 2007)

- Luminance modulations from small eye movements enhance vision of fine spatial details (Rucci et al., Nature, 2007)

- Possible developmental consequences of encoding space in time (Rucci et al., 2000; Casile & Rucci, 2008; Casile & Rucci, 2009)